Three IT security researchers from Technische Universität Berlin (TU Berlin) glitched Tesla’s driving assistant into activating a powerful “Elon mode” and were able to access the company’s secrets, Spiegel reported. The researchers believe all Tesla models are vulnerable to this attack.

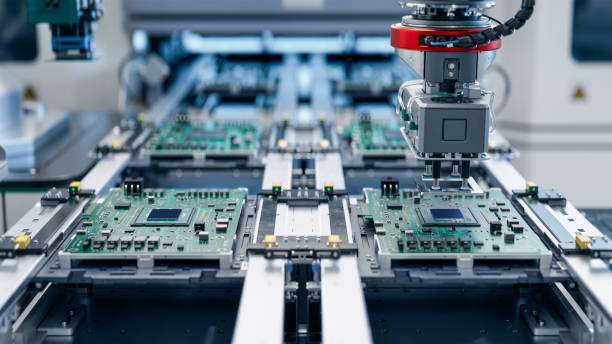

With tools costing around 600 euros, TU Berlin students Christian Werling, Niclas Kühnapfel, and Hans-Niklas Jacob induced a two-second voltage drop of 560 millivolts and gained root access to the ARM64-based circuit board of Tesla’s Autopilot.

The voltage glitch enabled the researchers to extract arbitrary code and user data from the system, including cryptographic keys and important system components, allowing them to reconstruct how it works. They even recovered a video with GPS coordinates that the vehicle’s previous owner had deleted, because the data had not been overwritten.

The researchers were also able to determine which data Tesla collects to train its AI and which it discards. They even managed to activate the more powerful “Elon mode,” Spiegel writes.

“Elon mode” is a hidden hands-free full self-driving feature that hackers had discovered earlier. This executive mode allows Tesla vehicles to drive themselves without any driver input or monitoring. It was discovered in June 2023 by @greentheonly, who tested the mode and posted some clips on social media.

The hack could also enable premium features for free. At Black Hat, the same researchers previously demonstrated a hack of the infotainment system’s AMD processor that enabled seat heaters for free.

Tesla’s “full self-driving” (FSD) capability is sold as a $12,000 add-on. However, it requires active driver supervision and does not make the vehicle autonomous. FSD has been repeatedly criticized for phantom braking and other incorrect decisions.

With their work, researchers wanted to explain Tesla’s safety architecture and gaps in its Autopilot system, raising concerns about how safe the cars really are.

“Despite using multiple cameras and Autopilot’s machine learning (ML) models, accidents persist and shape FSD reporting,” researchers said in their presentation.

They assume all Tesla vehicles are vulnerable to such an attack, as they probably all use the same circuit board, even if the owner did not buy the driving assistance system. The researchers themselves were surprised by how easy it was to get at Tesla’s secrets.

“What we showed today is that with the voltage fault injection attack, Tesla’s intellectual property could be threatened.”

However, the hack requires physical access to the circuit board, soldering skills, and the ability to remove and reinstall the board without damaging it. Such an attack would therefore not be very practical outside the laboratory.